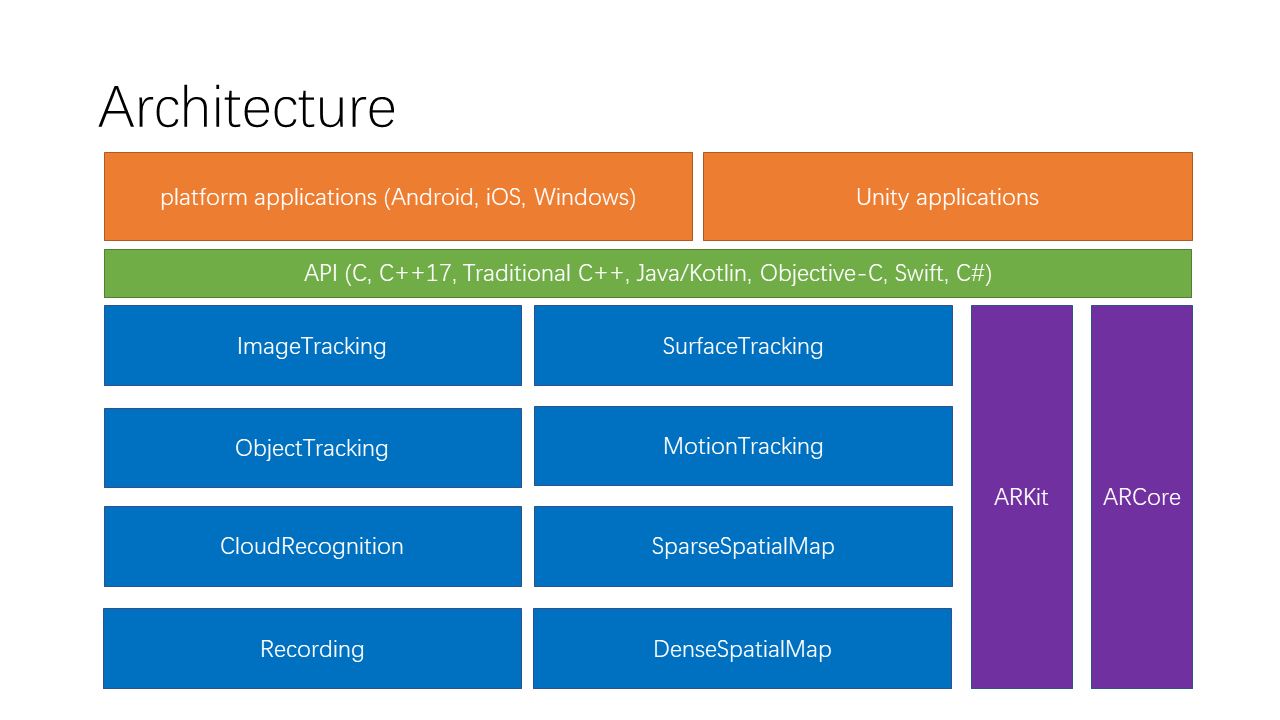

API Overview

This article introduces the API architecture of EasyAR Sense 4.0.

In versions 3.0 and 4.0, the EasyAR Sense API is restructured into dataflow-based components. This makes it easier to connect EasyAR Sense to other systems and to meet more flexible requirements.

Structure

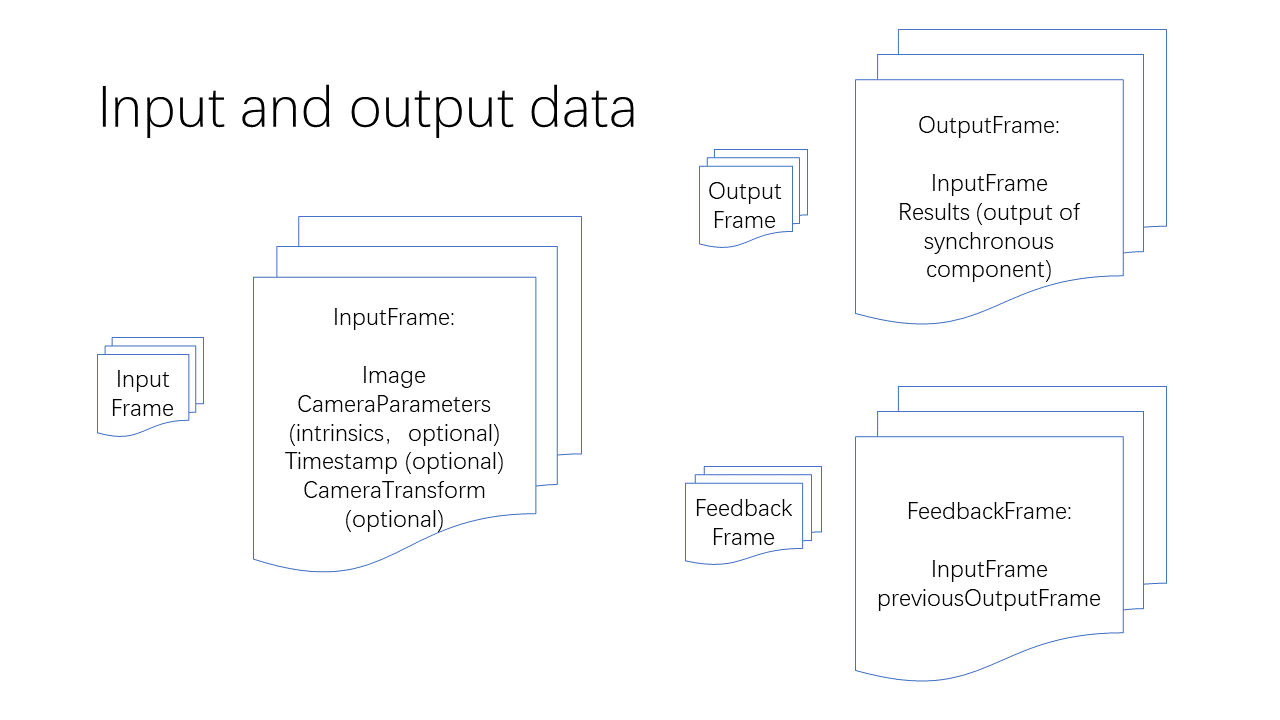

Input and output data

InputFrame: Input frame. It contains an image, camera parameters, a timestamp, the camera transform matrix relative to the world coordinate system, and the tracking status. The camera parameters, timestamp, camera transform matrix, and tracking status are all optional, but specific algorithms may require some of them.

OutputFrame: Output frame. It contains the input frame and the results of synchronous components.

FeedbackFrame: Feedback frame. It contains an input frame and a historic output frame, for use in feedback synchronous components such as ImageTracker.
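To make the optional fields concrete, the following C++17 sketch models the three frame types with std::optional. InputFrameModel, OutputFrameModel, FeedbackFrameModel, and hasPoseInputs are hypothetical stand-ins for illustration; they are not the EasyAR Sense classes or their accessors.

```cpp
#include <array>
#include <cassert>
#include <cstdint>
#include <memory>
#include <optional>
#include <string>
#include <vector>

// Hypothetical stand-ins for illustration; these are NOT EasyAR Sense types.
struct CameraParameters { float fx, fy, cx, cy; };    // pinhole intrinsics
using Matrix44 = std::array<float, 16>;                // row-major 4x4
enum class TrackingStatus { NotTracking, Limited, Normal };

struct InputFrameModel {
    std::vector<std::uint8_t> image;                   // the only required part
    std::optional<CameraParameters> cameraParameters;  // optional
    std::optional<double> timestamp;                   // optional, in seconds
    std::optional<Matrix44> cameraTransform;           // optional, camera against world
    std::optional<TrackingStatus> trackingStatus;      // optional
};

struct OutputFrameModel {
    std::shared_ptr<const InputFrameModel> inputFrame;
    std::vector<std::string> results;                  // one entry per synchronous component
};

struct FeedbackFrameModel {
    std::shared_ptr<const InputFrameModel> inputFrame;
    std::optional<OutputFrameModel> historicOutputFrame; // absent for the very first frame
};

// Hypothetical check an algorithm might run before accepting a frame,
// e.g. one that needs intrinsics plus an externally supplied camera pose.
bool hasPoseInputs(const InputFrameModel& frame) {
    return frame.cameraParameters.has_value() && frame.cameraTransform.has_value();
}
```

An algorithm that only needs the image would accept any InputFrameModel; one with stricter requirements would reject frames that fail a check like hasPoseInputs.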

Camera components

CameraDevice: The default camera implementation on Windows, macOS, iOS, and Android.

ARKitCameraDevice: The built-in ARKit-based camera implementation on iOS.

ARCoreCameraDevice: The built-in ARCore-based camera implementation on Android.

MotionTrackerCameraDevice: Implements motion tracking, which computes the 6DOF pose of the device using multi-sensor fusion. (Android only)

Custom camera device: A camera implementation provided by the user.

Algorithm components

Feedback synchronous components: They output a result for every input frame from the camera, and they require the results of the most recent historic output frame to avoid mutual interference.

ImageTracker: Implements image target detection and tracking.

ObjectTracker: Implements 3D object target detection and tracking.

Synchronous components: They output a result for every input frame from the camera.

SurfaceTracker: Implements tracking of environment surfaces.

SparseSpatialMap: Implements sparse spatial mapping, which scans physical space and generates a point cloud map for real-time localization. (Available in 4.0)

Asynchronous components: They do not output a result for every input frame from the camera.

CloudRecognizer: Implements cloud recognition.

DenseSpatialMap: Implements dense spatial mapping, which can be used for collision detection, occlusion, and similar tasks. (Available in 4.0)

Component availability check

Every component has an isAvailable method that checks whether the component can be used.

A component may be unavailable for the following reasons:

There is no implementation for the current operating system.

A required dependency, such as ARKit or ARCore, cannot be found.

The component does not exist in the current variant. For example, some reduced variants omit certain features.

The component cannot be used under the current license.

Always check a component's availability before using it, and fall back to an alternative or notify the user if it is not available.
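The check-then-fall-back pattern can be sketched as follows. To keep the example self-contained, the Fake* classes are hypothetical stand-ins that only mimic a static isAvailable method, and the preference order is an assumption; the real EasyAR Sense classes, their exact signatures, and the order your app needs may differ.

```cpp
#include <cassert>
#include <string>

// Hypothetical stand-ins: each mimics a component exposing a static isAvailable().
// The flags simulate the OS, dependency, variant, and license checks.
struct FakeARKitCamera   { static inline bool available = false; static bool isAvailable() { return available; } };
struct FakeARCoreCamera  { static inline bool available = false; static bool isAvailable() { return available; } };
struct FakeDefaultCamera { static inline bool available = true;  static bool isAvailable() { return available; } };

// Picks the first available camera in order of preference.
// An empty result means nothing is usable and the app should prompt the user.
std::string chooseCamera() {
    if (FakeARKitCamera::isAvailable())   { return "ARKitCameraDevice"; }
    if (FakeARCoreCamera::isAvailable())  { return "ARCoreCameraDevice"; }
    if (FakeDefaultCamera::isAvailable()) { return "CameraDevice"; }
    return "";
}
```

Keeping the fallback decision in one function makes it easy to test every branch by toggling the simulated availability.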

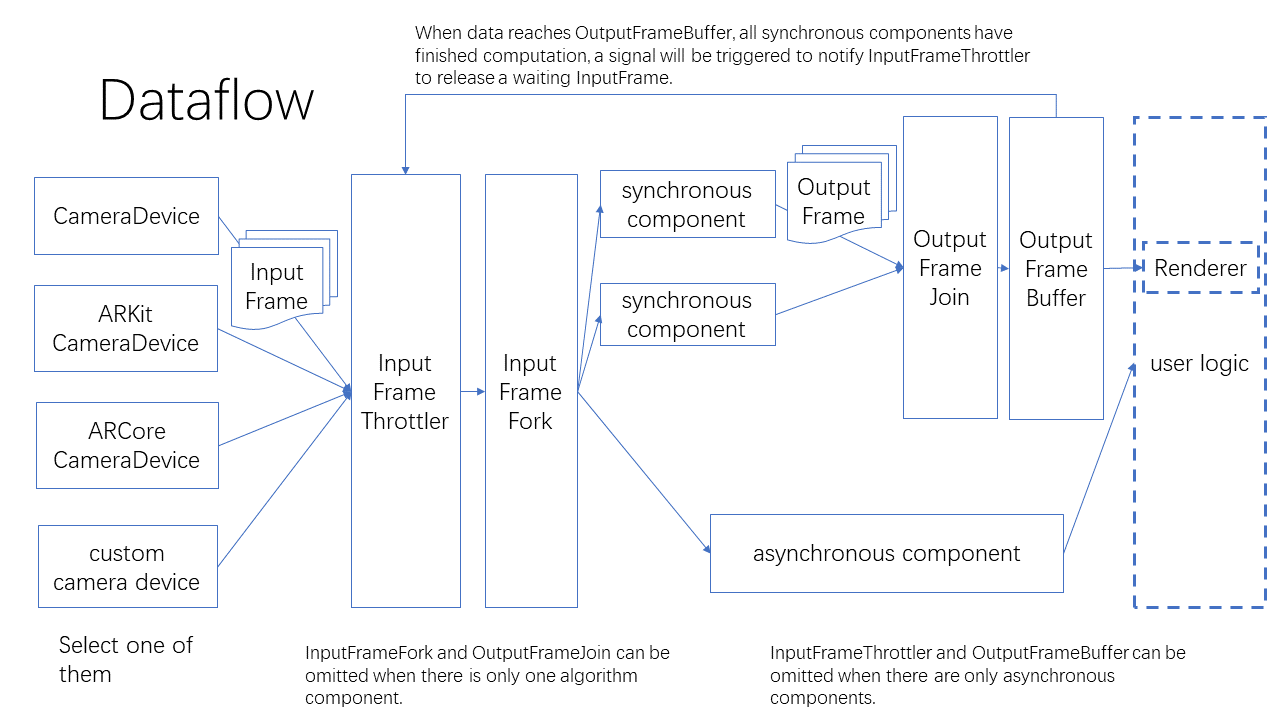

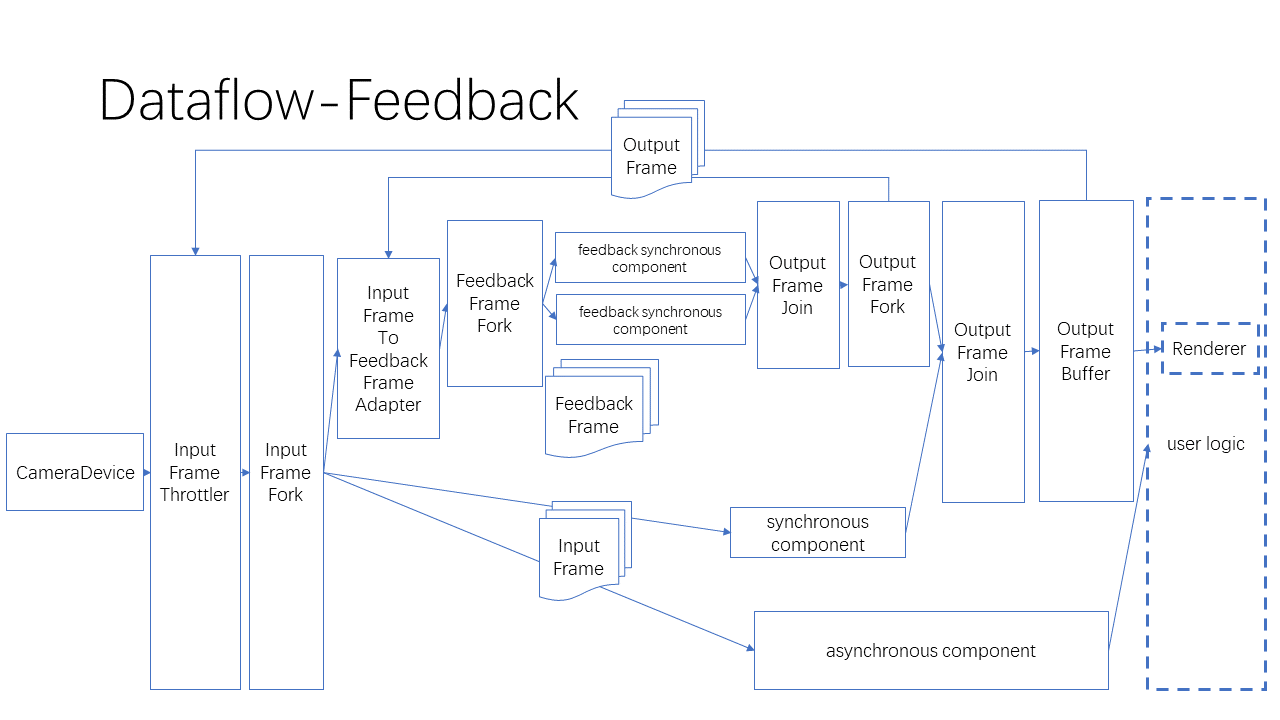

Dataflow

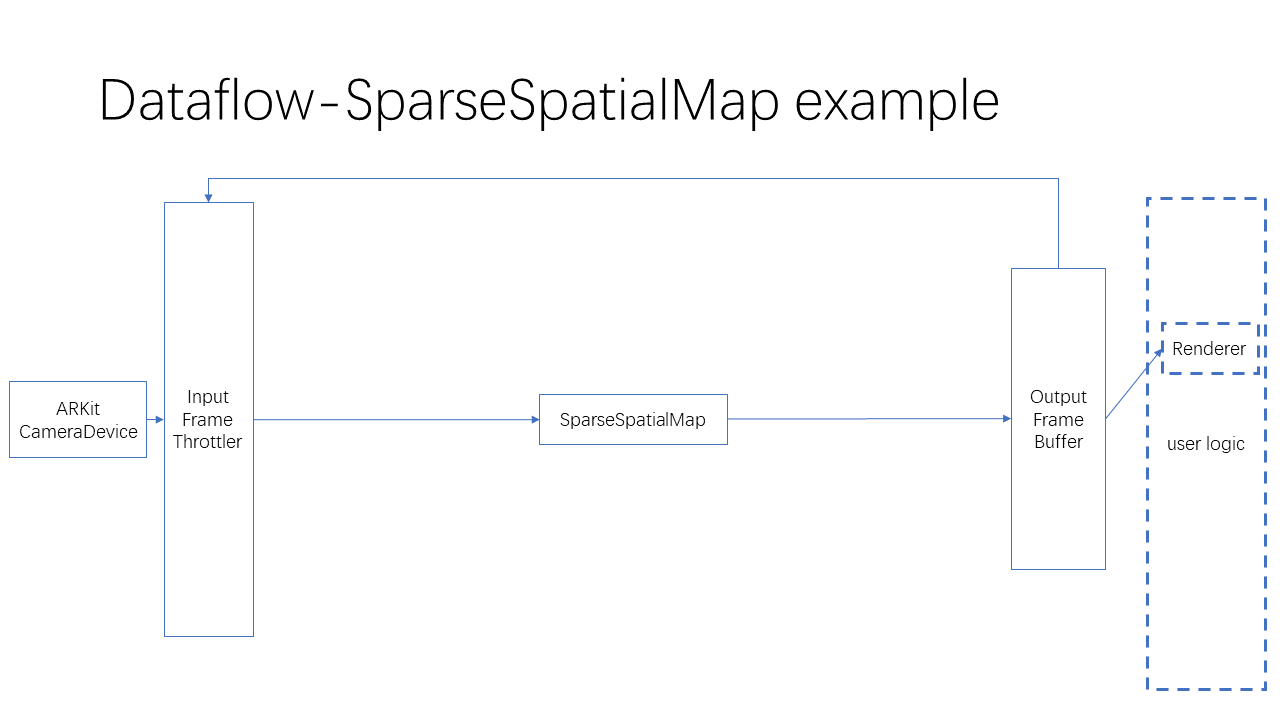

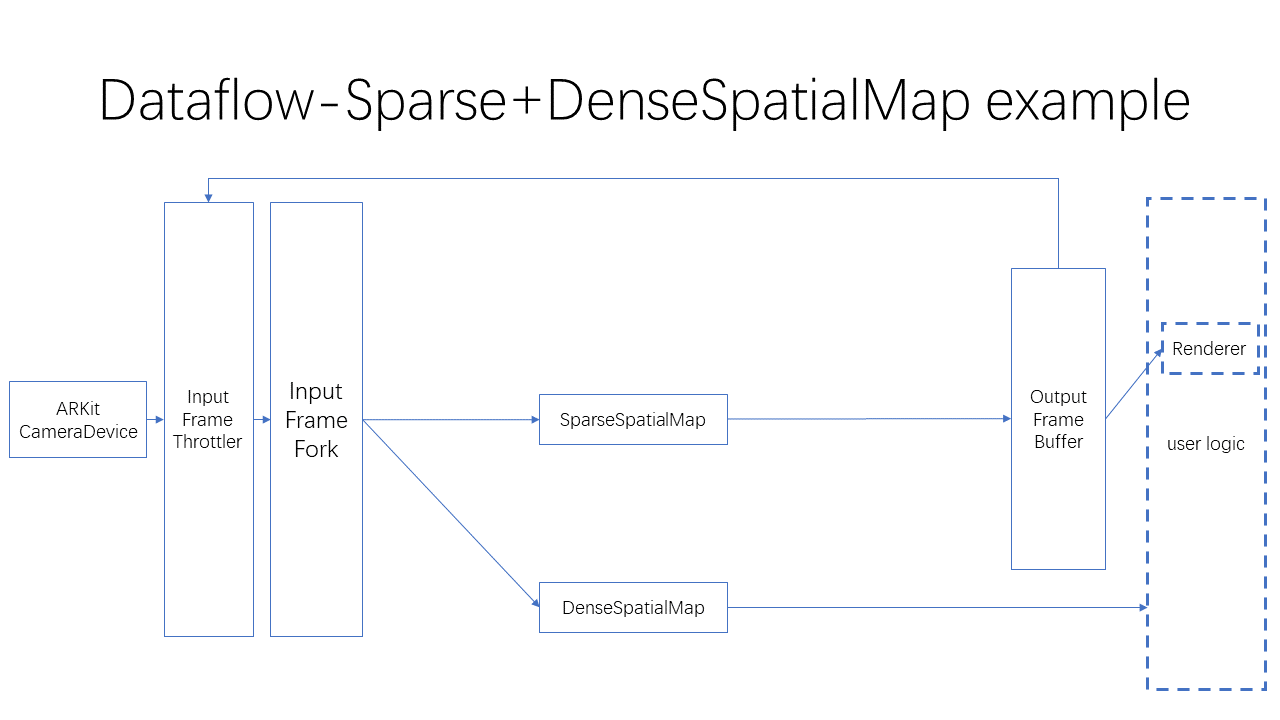

The connection of components is shown in the following diagrams.

Dataflow utility classes

Sending and receiving ports of the dataflow, which are contained in components

SignalSink / SignalSource: Receive/send a signal that carries no data.

InputFrameSink / InputFrameSource: Receive/send an InputFrame.

OutputFrameSink / OutputFrameSource: Receive/send an OutputFrame.

FeedbackFrameSink / FeedbackFrameSource: Receive/send a FeedbackFrame.
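As a mental model, a port pair is a sink that wraps a handler and a source that pushes values into whichever sink it is connected to. The Sink and Source templates below are a hypothetical sketch, not the EasyAR Sense API; in this sketch a source holds a single sink, and a value sent while nothing is connected is dropped.

```cpp
#include <cassert>
#include <functional>
#include <utility>

// Hypothetical model of a receiving port; not the EasyAR Sense API.
template <typename T>
class Sink {
public:
    explicit Sink(std::function<void(T)> handler) : handler_(std::move(handler)) {}
    void handle(T value) { handler_(std::move(value)); }
private:
    std::function<void(T)> handler_;
};

// Hypothetical model of a sending port.
template <typename T>
class Source {
public:
    void connect(Sink<T>* sink) { sink_ = sink; }
    void disconnect() { sink_ = nullptr; }
    // Pushes a value downstream; returns false if nothing is connected (value dropped).
    bool send(T value) {
        if (sink_ == nullptr) { return false; }
        sink_->handle(std::move(value));
        return true;
    }
private:
    Sink<T>* sink_ = nullptr; // this sketch allows one downstream sink per source
};

// A signal carries no data, so it can be modeled as an empty struct: Source<Signal>.
struct Signal {};
```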

Branching and merging of dataflow

InputFrameFork: Branches InputFrame into multiple parallel outputs.

OutputFrameFork: Branches OutputFrame into multiple parallel outputs.

OutputFrameJoin: Merges multiple OutputFrame inputs into one OutputFrame that carries all of their results. Do not connect its inputs while data is flowing; otherwise it may get stuck in a state where no frame can be output. (It is recommended to finish connecting the dataflow before starting a camera.)

FeedbackFrameFork: Branches FeedbackFrame into multiple parallel outputs.
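The warning about OutputFrameJoin follows from what a join has to do: it can only emit once every input has delivered a frame. The hypothetical JoinModel below (not the EasyAR implementation) keeps one slot per input and merges results once all slots are filled. An input that never receives frames, for example because it was not yet connected when data started flowing, leaves the join waiting indefinitely.

```cpp
#include <cassert>
#include <cstddef>
#include <optional>
#include <string>
#include <utility>
#include <vector>

// Hypothetical frame type; not the EasyAR OutputFrame.
struct FrameModel { int id; std::vector<std::string> results; };

// Hypothetical model of a join: it emits only when every input slot holds a frame.
class JoinModel {
public:
    explicit JoinModel(std::size_t inputCount) : slots_(inputCount) {}

    // Delivers a frame on input `index`; returns the merged frame once all inputs are filled.
    std::optional<FrameModel> push(std::size_t index, FrameModel frame) {
        slots_.at(index) = std::move(frame);
        for (const auto& slot : slots_) {
            if (!slot) { return std::nullopt; } // still waiting on at least one input
        }
        FrameModel merged{slots_.front()->id, {}};
        for (auto& slot : slots_) {
            merged.results.insert(merged.results.end(), slot->results.begin(), slot->results.end());
            slot.reset(); // empty the slot for the next round
        }
        return merged;
    }

private:
    std::vector<std::optional<FrameModel>> slots_;
};
```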

Throttling and buffering of dataflow

InputFrameThrottler: Receives and sends InputFrame one at a time. The next InputFrame is sent only after a signal is received. If multiple InputFrame arrive in the meantime, a later InputFrame may overwrite an earlier pending one.

OutputFrameBuffer: Receives and buffers OutputFrame for polling. It sends a signal after receiving an OutputFrame.

Connecting the signal from OutputFrameBuffer to InputFrameThrottler completes the throttling.
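The throttling loop can be modeled in a few lines. ThrottlerModel and BufferModel are hypothetical single-threaded stand-ins for InputFrameThrottler and OutputFrameBuffer, with frames reduced to plain ints: the throttler keeps at most one pending frame, overwrites it when a newer frame arrives, and releases it only when the buffer signals that the previous output has arrived.

```cpp
#include <cassert>
#include <functional>
#include <optional>
#include <vector>

// Hypothetical model of InputFrameThrottler; frames are plain ints here.
class ThrottlerModel {
public:
    std::function<void(int)> output;       // downstream, e.g. a tracker

    void input(int frame) {
        pending_ = frame;                   // a newer frame overwrites an unsent one
        tryEmit();
    }
    void signal() {                         // "the previous frame has come out the other end"
        ready_ = true;
        tryEmit();
    }

private:
    void tryEmit() {
        if (!ready_ || !pending_) { return; }
        int frame = *pending_;
        pending_.reset();
        ready_ = false;                     // wait for the next signal
        output(frame);
    }
    std::optional<int> pending_;
    bool ready_ = true;                     // the first frame passes immediately
};

// Hypothetical model of OutputFrameBuffer: keeps the latest output for polling, then signals.
class BufferModel {
public:
    std::function<void()> signalOutput;
    void input(int outputFrame) {
        latest_ = outputFrame;
        if (signalOutput) { signalOutput(); }
    }
    std::optional<int> peek() const { return latest_; }

private:
    std::optional<int> latest_;
};
```

Wiring buffer.signalOutput to throttler.signal and feeding frames 1, 2, 3 while frame 1 is still being processed means frame 2 is never processed: frame 3 overwrites it. This dropping keeps latency bounded instead of letting frames pile up.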

Dataflow conversion

InputFrameToOutputFrameAdapter: Wraps an InputFrame into an OutputFrame for rendering.

InputFrameToFeedbackFrameAdapter: Wraps an InputFrame and a historic OutputFrame into a FeedbackFrame, for use in feedback synchronous components.
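The feedback adapter closes the loop for feedback synchronous components. The hypothetical FeedbackAdapterModel below (not the EasyAR class) remembers the most recent OutputFrame received on a side input and pairs it with each new InputFrame. The very first frame has no historic output yet.

```cpp
#include <cassert>
#include <optional>
#include <string>
#include <vector>

// Hypothetical stand-ins; not the EasyAR Sense types.
struct InFrame { int id; };
struct OutFrame { int id; std::vector<std::string> results; };
struct FbFrame { InFrame input; std::optional<OutFrame> previousOutput; };

class FeedbackAdapterModel {
public:
    // Side input: receives the latest OutputFrame produced further downstream.
    void sideInput(const OutFrame& out) { lastOutput_ = out; }

    // Main input: wraps the new InputFrame together with the last known OutputFrame.
    FbFrame input(const InFrame& in) const { return FbFrame{in, lastOutput_}; }

private:
    std::optional<OutFrame> lastOutput_;
};
```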

InputFrame count limitation

For CameraDevice, the buffer capacity can be adjusted. It is the maximum number of outstanding InputFrame objects. The default value is 8.

For custom cameras, BufferPool can be used to implement similar functionality.

For the number of InputFrame objects held by each component, refer to the API documentation of that component.

If the buffer capacity is insufficient, the dataflow may stall, which in turn stalls rendering.

An insufficient buffer capacity does not always show up at startup: the dataflow may run normally at first and stall only after the app is switched to the background and back, or after components are paused and resumed. Cover these cases in tests.
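Why too small a capacity stalls rather than fails loudly: a producer can only create a new frame while fewer than `capacity` frames are outstanding. The hypothetical BoundedPool below (not EasyAR's BufferPool, whose interface may differ) counts outstanding buffers with shared_ptr deleters. Once every buffer is held somewhere downstream, acquisition fails and no new frames can be produced until one is released.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <vector>

// Hypothetical bounded pool; NOT the EasyAR BufferPool interface.
class BoundedPool {
public:
    explicit BoundedPool(std::size_t capacity)
        : state_(std::make_shared<State>(State{capacity, 0})) {}

    // Returns a buffer if fewer than `capacity` are outstanding, otherwise nullptr.
    // The slot goes back to the pool automatically when the last owner releases the buffer.
    std::shared_ptr<std::vector<unsigned char>> tryAcquire(std::size_t bytes) {
        if (state_->outstanding >= state_->capacity) { return nullptr; }
        ++state_->outstanding;
        std::shared_ptr<State> state = state_; // keeps the counter alive for the deleter
        return std::shared_ptr<std::vector<unsigned char>>(
            new std::vector<unsigned char>(bytes),
            [state](std::vector<unsigned char>* buffer) {
                delete buffer;
                --state->outstanding;
            });
    }

    std::size_t outstanding() const { return state_->outstanding; }

private:
    struct State {
        std::size_t capacity;
        std::size_t outstanding;
    };
    std::shared_ptr<State> state_;
};
```

In this model, every component that keeps a frame alive (a throttler's pending slot, a buffer's latest output, a frame being tracked) holds one slot, which is why the required capacity depends on how the dataflow is wired.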

Connections and disconnections

It is not recommended to connect or disconnect while the dataflow is running.

If you must connect or disconnect while the dataflow is running, do so only on cut edges (edges whose removal splits the graph). Do not connect or disconnect edges that are part of a loop, the inputs of OutputFrameJoin, or the sideInput of InputFrameThrottler; otherwise the dataflow may get stuck in a state where no frame can be output from OutputFrameJoin or InputFrameThrottler.

Every algorithm component can be started and stopped. While a component is stopped, it does not process frames, but it still outputs them, without results.
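A stopped component still passes frames through, just without results. The hypothetical ComponentModel below (not an EasyAR class) shows that behavior. Under this model, one consequence is that downstream components such as a join or a buffer keep receiving frames while a tracker is stopped.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical frame and component; not the EasyAR Sense API.
struct Frame { int id; std::vector<std::string> results; };

class ComponentModel {
public:
    void start() { running_ = true; }
    void stop() { running_ = false; }

    // Every frame is forwarded; a result is attached only while the component is running.
    Frame process(Frame frame) const {
        if (running_) { frame.results.push_back("tracking result"); }
        return frame;
    }

private:
    bool running_ = false;
};
```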

Typical usages

Supported languages

EasyAR Sense supports the following languages.

C

Supported with C99 on Visual C++, GCC, and Clang.

C++

Supported with C++17 on Visual C++, GCC, and Clang.

The only C++17 feature in use is std::optional. If you need to use EasyAR Sense with C++11, you can use optional lite: in the interface header, replace std::optional with nonstd::optional, and replace #include <optional> with #include "nonstd/optional.hpp".
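If you would rather switch between the two implementations in one place, a small alias header is one option. This is a sketch under assumptions: the compat namespace and the COMPAT_CPLUSPLUS macro are hypothetical, you would still need to make the EasyAR interface header use compat::optional instead of std::optional, and _MSVC_LANG is checked because MSVC reports an old __cplusplus value unless /Zc:__cplusplus is set.

```cpp
// Hypothetical compatibility shim; the EasyAR header does not ship this.
#if defined(_MSVC_LANG)
#define COMPAT_CPLUSPLUS _MSVC_LANG
#else
#define COMPAT_CPLUSPLUS __cplusplus
#endif

#if COMPAT_CPLUSPLUS >= 201703L
#include <optional>
namespace compat {
using std::optional;
using std::nullopt;
}
#else
#include "nonstd/optional.hpp" // optional lite
namespace compat {
using nonstd::optional;
using nonstd::nullopt;
}
#endif

#include <cassert>

// Example use: the same code compiles against either implementation.
compat::optional<int> parsePositive(int x) {
    if (x > 0) { return x; }
    return compat::nullopt;
}
```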

Java

Only supported on Android, with Java SE 6 or later.

Kotlin

Only supported on Android.

Objective-C

Only supported on iOS/macOS.

Swift

Supported for Swift 4.2 and later.

C#

Supported for .NET Framework 3.5 and later, .NET Core, Mono, Unity/Mono, and Unity/IL2CPP.

Thread-safety model

Unless otherwise noted, all static members of a class are thread-safe.

Unless otherwise noted, the instance members of a class are thread-safe only when access to them is protected by external locking.

Unless otherwise noted, an object's destructor can be called safely from any thread once all other calls on that object have completed.
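In practice, "external locking" means serializing access yourself when an instance is shared between threads. The wrapper below is a generic C++ sketch; the Guarded template and the Counter type are hypothetical, and any equivalent mutex discipline works. Every call on the wrapped object goes through one std::mutex.

```cpp
#include <cassert>
#include <mutex>
#include <thread>
#include <utility>
#include <vector>

// Hypothetical wrapper: every access to the wrapped object happens under one mutex.
template <typename T>
class Guarded {
public:
    template <typename F>
    auto with(F&& f) {
        std::lock_guard<std::mutex> lock(mutex_);
        return std::forward<F>(f)(object_);
    }

private:
    std::mutex mutex_;
    T object_;
};

// Stand-in for a class whose instance members are not internally synchronized.
struct Counter {
    int value = 0;
    void increment() { ++value; }
};

// Increments a shared Counter from several threads, always under the external lock.
int countFromThreads(int threads, int perThread) {
    Guarded<Counter> counter;
    std::vector<std::thread> workers;
    for (int t = 0; t < threads; ++t) {
        workers.emplace_back([&counter, perThread] {
            for (int i = 0; i < perThread; ++i) {
                counter.with([](Counter& c) { c.increment(); });
            }
        });
    }
    for (auto& w : workers) { w.join(); }
    return counter.with([](Counter& c) { return c.value; });
}
```

All worker threads are joined before countFromThreads returns, so the Guarded object is destroyed only after every other call on it has completed, matching the destructor rule above.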

Memory model

EasyAR Sense uses C++ std::shared_ptr for reference counting internally. Unfortunately, reference counting is fundamentally incompatible with garbage collection, and the two cannot be bridged seamlessly.

For C, C#, and Java/Kotlin:

Manual reference counting is required: call dispose to release a reference, and call clone to copy a reference.

Note in particular that callback arguments passed by EasyAR Sense are released automatically after the callback returns. To keep an argument beyond the callback, clone it. The automatic release means that a callback containing no logic code does not need to release anything.

Note that using EasyAR Sense objects inside EasyAR Sense callbacks may create circular references. Treat these objects as resources (like files or operating system handles) and release them manually to prevent memory leaks.
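The manual model can be pictured as a handle whose dispose drops one reference and whose clone adds one. The Handle template and the invokeCallback helper below are hypothetical illustrations written in C++ on top of std::shared_ptr; the real C, C#, and Java/Kotlin bindings have their own types. They show why an argument must be cloned to survive past the callback.

```cpp
#include <cassert>
#include <functional>
#include <memory>
#include <optional>
#include <utility>

// Hypothetical handle mimicking manual reference counting on top of shared_ptr.
template <typename T>
class Handle {
public:
    explicit Handle(std::shared_ptr<T> ref) : ref_(std::move(ref)) {}
    Handle clone() const { return Handle(ref_); }  // adds a reference
    void dispose() { ref_.reset(); }               // releases this reference
    bool disposed() const { return !ref_; }
    T& get() const { return *ref_; }

private:
    std::shared_ptr<T> ref_;
};

// Mimics the binding layer: the argument is disposed right after the callback returns.
template <typename T>
void invokeCallback(const std::function<void(Handle<T>&)>& callback, Handle<T> argument) {
    callback(argument);
    argument.dispose(); // automatic release, so an empty callback leaks nothing
}
```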

For C++, Objective-C, and Swift:

The language's built-in reference counting is used.

Note that using EasyAR Sense objects inside EasyAR Sense callbacks may create circular references. Use reference captures, std::weak_ptr, and similar tools where appropriate to prevent memory leaks.
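A typical cycle in C++: an owner object holds a component, and the component's callback captures a shared_ptr back to the owner. The Owner and Component types below are hypothetical. Capturing a std::weak_ptr instead breaks the cycle, so the owner is destroyed when its last outside reference goes away.

```cpp
#include <cassert>
#include <functional>
#include <memory>

// Hypothetical component that stores a user callback.
struct Component {
    std::function<void()> callback;
};

struct Owner : std::enable_shared_from_this<Owner> {
    Component component;
    int events = 0;
    static inline int destroyed = 0;
    ~Owner() { ++destroyed; }

    // Capturing shared_from_this() here would form Owner -> Component -> callback -> Owner.
    void subscribe() {
        std::weak_ptr<Owner> weak = weak_from_this();
        component.callback = [weak] {
            if (auto self = weak.lock()) { ++self->events; } // no-op once Owner is gone
        };
    }
};
```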

Character encoding

The character encodings used in the API are as follows.

For C and C++:

UTF-8

For Java, Kotlin, Objective-C, and C#:

UTF-16

For Swift:

UTF-16 or UTF-8; see the Swift documentation.
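When text starts out as UTF-16 (for example from a platform string API) and must be passed to the C or C++ API, it has to be converted to UTF-8 first. The function below is a hand-written sketch of that conversion; utf16ToUtf8 is a hypothetical helper, not part of EasyAR Sense. It throws on unpaired surrogates instead of substituting U+FFFD, which is a design choice you may want to change.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <string>

// Converts UTF-16 text to UTF-8, combining surrogate pairs into one code point.
std::string utf16ToUtf8(const std::u16string& in) {
    std::string out;
    for (std::size_t i = 0; i < in.size(); ++i) {
        std::uint32_t cp = in[i];
        if (cp >= 0xD800 && cp <= 0xDBFF) {            // high surrogate
            if (i + 1 >= in.size()) { throw std::invalid_argument("truncated surrogate pair"); }
            std::uint32_t low = in[i + 1];
            if (low < 0xDC00 || low > 0xDFFF) { throw std::invalid_argument("invalid low surrogate"); }
            cp = 0x10000 + ((cp - 0xD800) << 10) + (low - 0xDC00);
            ++i;
        } else if (cp >= 0xDC00 && cp <= 0xDFFF) {
            throw std::invalid_argument("unpaired low surrogate");
        }
        if (cp < 0x80) {                                // 1 byte
            out += static_cast<char>(cp);
        } else if (cp < 0x800) {                        // 2 bytes
            out += static_cast<char>(0xC0 | (cp >> 6));
            out += static_cast<char>(0x80 | (cp & 0x3F));
        } else if (cp < 0x10000) {                      // 3 bytes
            out += static_cast<char>(0xE0 | (cp >> 12));
            out += static_cast<char>(0x80 | ((cp >> 6) & 0x3F));
            out += static_cast<char>(0x80 | (cp & 0x3F));
        } else {                                        // 4 bytes
            out += static_cast<char>(0xF0 | (cp >> 18));
            out += static_cast<char>(0x80 | ((cp >> 12) & 0x3F));
            out += static_cast<char>(0x80 | ((cp >> 6) & 0x3F));
            out += static_cast<char>(0x80 | (cp & 0x3F));
        }
    }
    return out;
}
```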

Vectors and coordinate systems

Unless otherwise specified, the following conventions apply.

All vectors are column vectors.

All matrices are row-major (unlike OpenGL, which uses column-major).

All coordinate systems are right-handed (the same as OpenGL).

Coordinate systems with physical scale are measured in meters.

The negative Y axis of the world coordinate system points in the direction of Earth's gravity.

The device coordinate system has x pointing right, y pointing up, and z pointing out of the screen. For a device with a rotatable screen, right and up are defined relative to its default orientation. On Android, the default orientation is the one defined by the operating system (eyewear and some tablets default to landscape; phones and some tablets default to portrait). On iOS, the default orientation is portrait. (This matches the IMU conventions in Android and iOS.)
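With row-major storage and column vectors, a translation sits in the last column of the stored rows (array elements 3, 7, and 11), and passing the same matrix to OpenGL requires a transpose. A small sketch, with hypothetical helper names multiply and toColumnMajor:

```cpp
#include <array>
#include <cassert>

using Mat4 = std::array<float, 16>;  // row-major: element (r, c) is m[r * 4 + c]
using Vec4 = std::array<float, 4>;   // column vector

// y = M * x, with x treated as a column vector.
Vec4 multiply(const Mat4& m, const Vec4& x) {
    Vec4 y{};
    for (int r = 0; r < 4; ++r) {
        for (int c = 0; c < 4; ++c) {
            y[r] += m[r * 4 + c] * x[c];
        }
    }
    return y;
}

// OpenGL expects column-major storage, i.e. the transpose of the row-major array.
Mat4 toColumnMajor(const Mat4& m) {
    Mat4 out{};
    for (int r = 0; r < 4; ++r) {
        for (int c = 0; c < 4; ++c) {
            out[c * 4 + r] = m[r * 4 + c];
        }
    }
    return out;
}
```

For example, a row-major translation by 10 m along x moves the point (1, 2, 3) to (11, 2, 3), and after toColumnMajor the translation component appears at index 12, where OpenGL expects it.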