Start from Zero¶

This article describes how to quickly create an AR application using the EasyAR Sense Unity Plugin.

Preparation¶

Prepare Unity Environment¶

Read Platform Requirements to learn which system and Unity versions the EasyAR Sense Unity Plugin supports, then download Unity from the Unity website.

If this is your first time using Unity, we suggest choosing one of Unity's LTS releases.

Prepare Package¶

Get the latest EasyAR Sense Unity Plugin package from the download page.

Get Licensing¶

Before using EasyAR Sense, you need to register on www.easyar.com and get a license key.

Create Project¶

Create Empty Unity Project¶

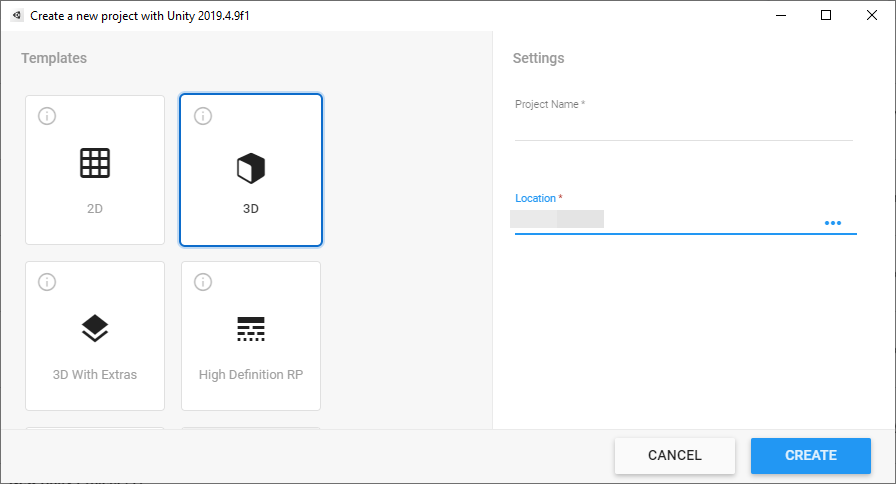

Choose the 3D template when creating the project. If you are using URP, refer to Universal Render Pipeline (URP) Configuration for setup details.

Add Plugin Package¶

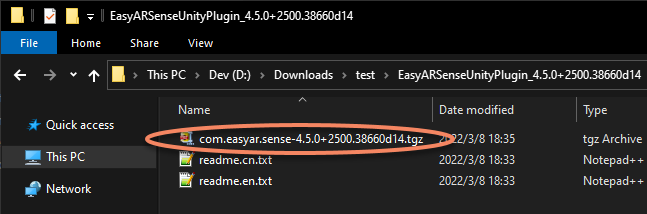

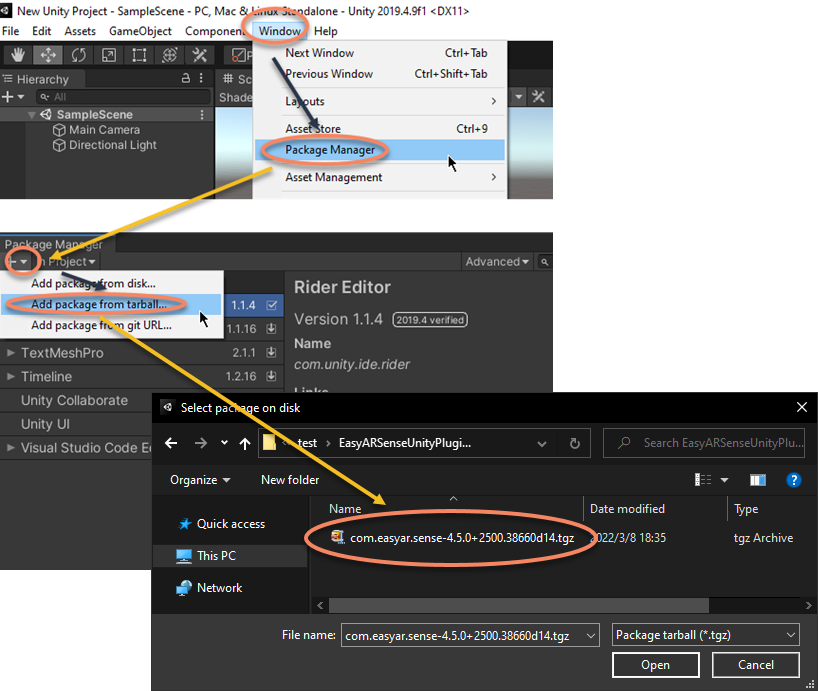

The plugin is organized as a Unity package and distributed as a tarball file. The release package is a zip file; extracting it gives you readme files and a tgz file. Do not extract the tgz file.

We suggest putting the tgz file inside the project, for example in the Packages folder. Then use Unity's Package Manager window to install the plugin from a local tarball file.

Choose the com.easyar.sense-*.tgz file in the popup dialogue.

NOTE: The tgz file must not be deleted or moved after import, so it is good practice to choose a suitable location for the file before importing it. If you want to share the project with others, keep the file inside the project and commit it to your version control system.

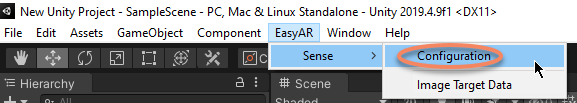

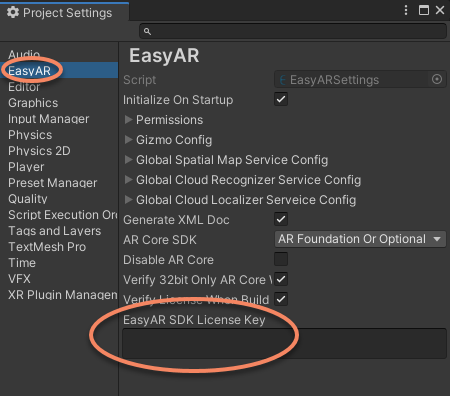

Fill in License Key¶

Choose EasyAR > Sense > Configuration from the Unity menu and fill in the License Key in the Project Settings. This page can also be opened from Edit > Project Settings > EasyAR.

Create a Scene with a Camera¶

Create a scene, or use the scene created automatically with the project, and make sure there is a Camera in the scene.

NOTE: If you are using AR Foundation, a separate Camera like the one above is not required. Refer to Working with AR Foundation for details on how to set up a scene before going to the next step.

NOTE: If you are using AR Engine Unity SDK, a separate Camera like the one above is not required. Refer to Working with Huawei AR Engine for details on how to set up a scene before going to the next step.

NOTE: If you are using Nreal SDK, a separate Camera like the one above is not required. Refer to Working with Nreal Devices for details on how to set up a scene before going to the next step.

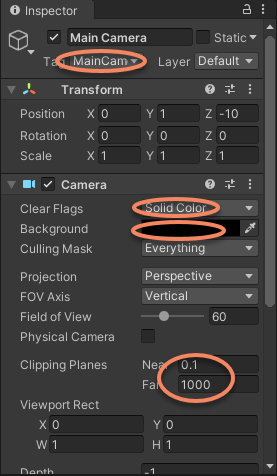

Set up the Camera as follows (if you are using AR Foundation, AR Engine Unity SDK or Nreal SDK, these values are usually pre-set by those packages):

Tag: If the camera is not from AR Foundation, AR Engine Unity SDK or Nreal SDK, you can set the Camera Tag to MainCamera so that the AR Session frame source picks it up when it starts. Alternatively, you can assign this camera to the frame source by changing FrameSource.Camera in its inspector.

Clear Flags: Must be Solid Color so that the camera image renders normally. The camera image is not shown when Skybox is used.

Background: Not required, but setting the background to black improves the user experience before the camera opens and when switching between cameras.

Clipping Planes: Set according to physical distances in the real world. The near plane is set to 0.1 (m) here so that an object is not clipped away when the camera device is close to it.

AR Foundation usually sets its clipping planes to (0.1, 20), which may clip away objects displayed more than 20 meters from the Camera (the device in the real world). Make sure to change these values to suit your needs before using them.
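The camera values described above are normally set in the Inspector, but they can also be applied from a script. The following is a minimal sketch that is not part of the EasyAR plugin: the class name ARCameraSetup and the far clipping value of 1000 are assumptions chosen for illustration, and it is meant only for a standalone Camera, not one managed by AR Foundation, AR Engine Unity SDK or Nreal SDK. It uses only standard UnityEngine.Camera properties, and the Tag is left to the Inspector.

```csharp
using UnityEngine;

// Hypothetical helper (not part of EasyAR): applies the camera values
// described in this section to the Camera on the same GameObject.
[RequireComponent(typeof(Camera))]
public class ARCameraSetup : MonoBehaviour
{
    // Clipping distances in meters. 0.1 follows the text above;
    // the far value is an assumption and should match your scene.
    [SerializeField] private float nearClip = 0.1f;
    [SerializeField] private float farClip = 1000f;

    private void Awake()
    {
        var cam = GetComponent<Camera>();

        // Solid Color is required so that the camera image can be shown.
        cam.clearFlags = CameraClearFlags.SolidColor;

        // Black background for the time before the camera device opens.
        cam.backgroundColor = Color.black;

        cam.nearClipPlane = nearClip;
        cam.farClipPlane = farClip;
    }
}
```

Attach the component to the Camera object. Setting the same values directly in the Inspector has the same effect and is usually simpler.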

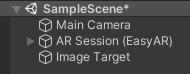

Create EasyAR AR Session¶

You can create an AR Session using presets or Node-by-Node.

Create AR Session Using Presets¶

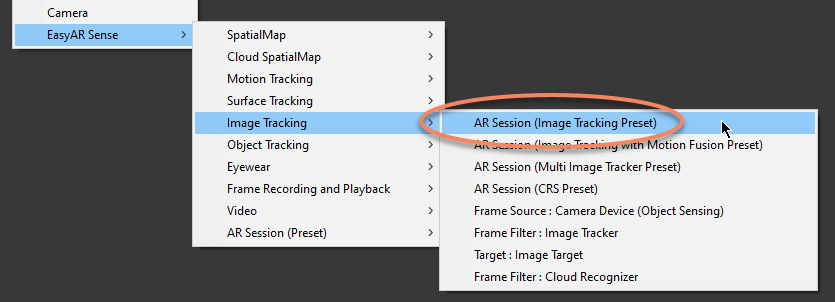

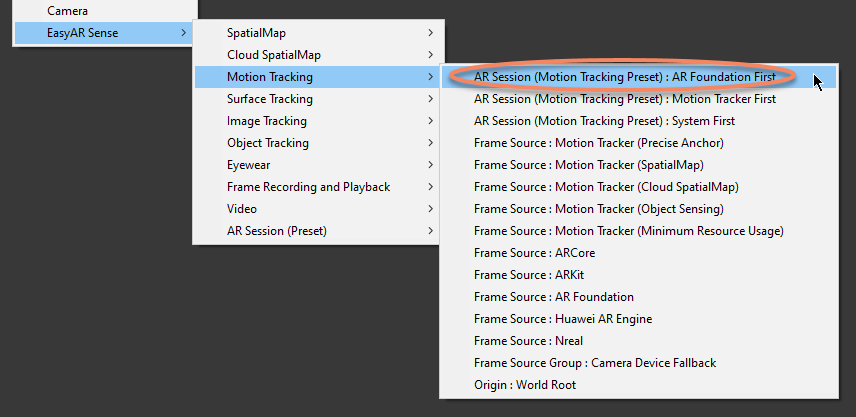

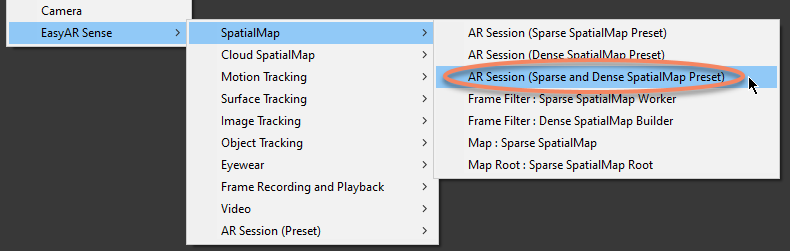

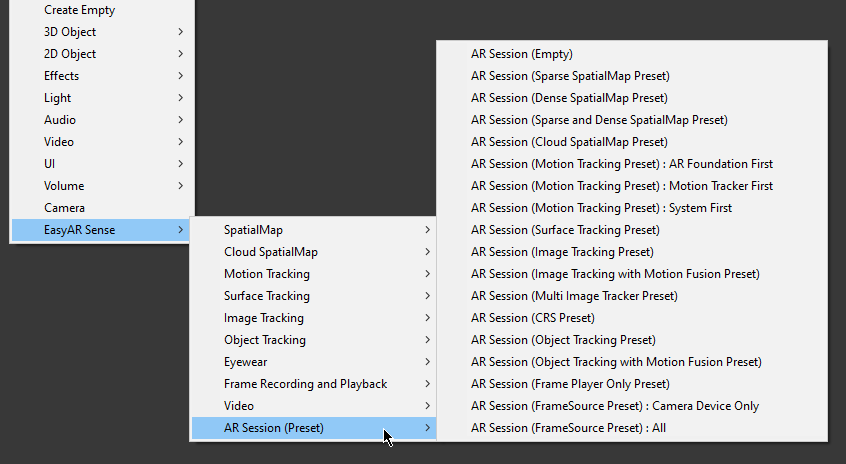

The GameObject menu provides many presets for convenience, and they cover most use cases.

For example, if you want to use Image Tracking, you can create the AR Session with EasyAR Sense > Image Tracking > AR Session (Image Tracking Preset).

If you want to use Motion Tracking in a typical ARCore-like or ARKit-like way, you can create the AR Session with EasyAR Sense > Motion Tracking > AR Session (Motion Tracking Preset) : AR Foundation First.

If you want to build a Sparse SpatialMap and a Dense SpatialMap at the same time, you can create the AR Session with EasyAR Sense > SpatialMap > AR Session (Sparse and Dense SpatialMap Preset).

The menu EasyAR Sense > AR Session (Preset) collects all presets in one place. If a preset of the same name appears both in this collection menu and in a feature menu, both entries create the same AR Session.

Create AR Session Node-by-Node¶

If the AR Session presets do not fit your needs, you can also create an AR Session Node-by-Node.

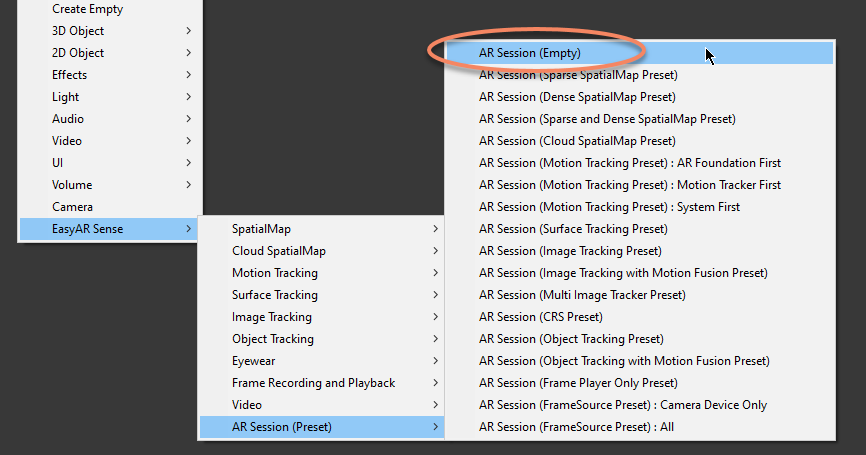

For example, to use Sparse SpatialMap and Image Tracking in the same session, first create an empty ARSession with EasyAR Sense > AR Session (Preset) > AR Session (Empty).

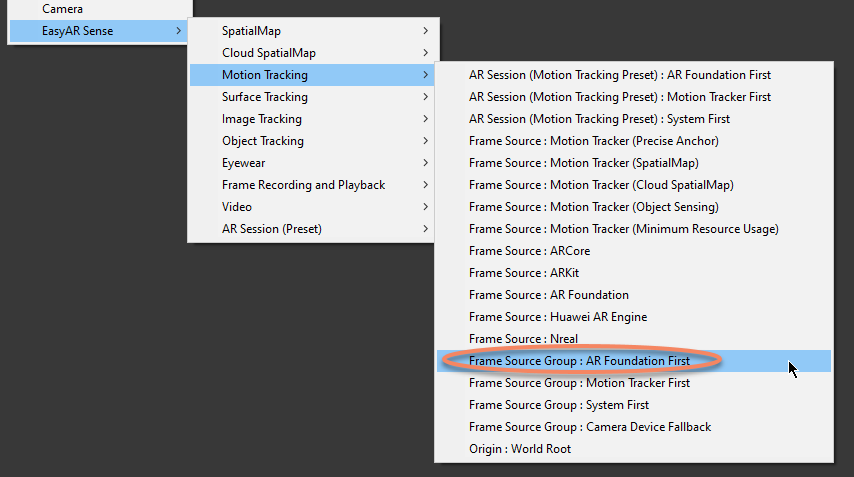

Then add a FrameSource to the session. Sparse SpatialMap requires a FrameSource that represents a motion tracking device, so you usually need different frame sources on different devices. Here we create a Frame Source Group with EasyAR Sense > Motion Tracking > Frame Source Group : AR Foundation First; the frame source actually used by the session is selected at runtime. You can add several frame source groups or a single frame source to the session, according to your needs.

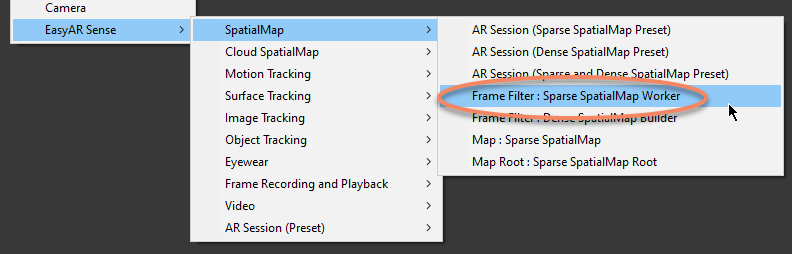

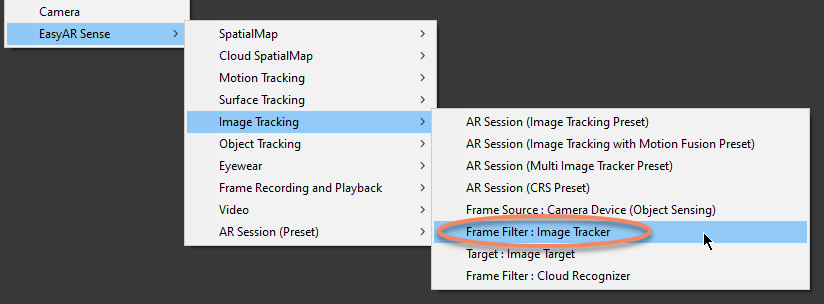

After adding the frame source, add the frame filters used by the session. To use Sparse SpatialMap and Image Tracking in the same session, add a SparseSpatialMapWorkerFrameFilter and an ImageTrackerFrameFilter to the session with EasyAR Sense > SpatialMap > Frame Filter : Sparse SpatialMap Worker and EasyAR Sense > Image Tracking > Frame Filter : Image Tracker.

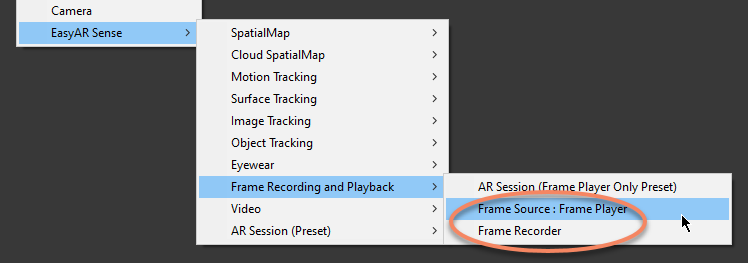

Sometimes you may want to record input frames on a device and play them back on a PC for diagnosis in the Unity Editor. In that case, add a FramePlayer and a FrameRecorder to the session. (You need to set FrameSource.FramePlayer or FrameSource.FrameRecorder to use them.)

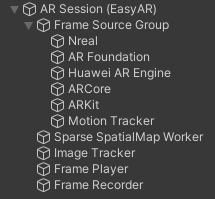

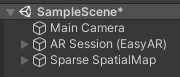

The AR Session will look like this in the end.

Create Targets or Maps¶

For some features, you need a target or map in the scene to act as the parent of other content, so that the content keeps its relative pose when the target or map moves in the scene.

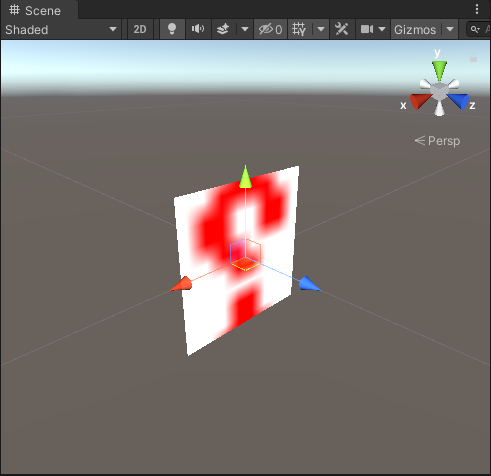

Create ImageTarget¶

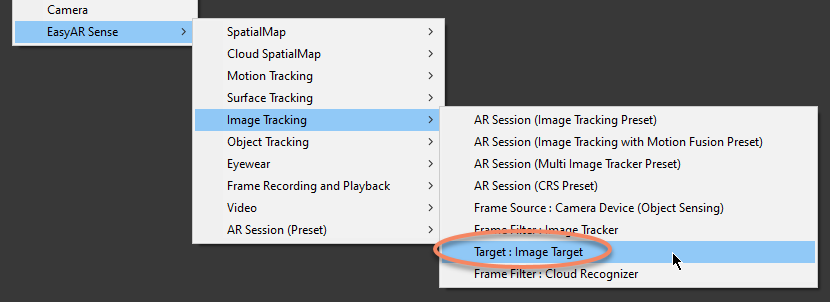

If you want to use Image Tracking, you need to create an ImageTargetController with EasyAR Sense > Image Tracking > Target : Image Target.

The ImageTarget in the scene is shown as a question mark after creation.

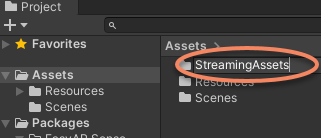

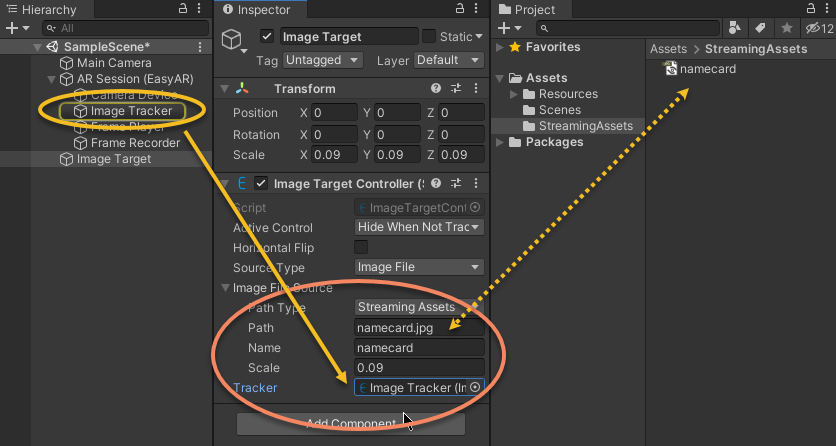

Then you need to configure the ImageTarget. There are many ways to do this; here we use one of them and configure the target from an image in StreamingAssets.

Create a StreamingAssets folder in Assets.

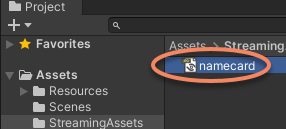

Drag the image to be tracked into StreamingAssets. Here we use a name card image.

Then configure the ImageTargetController to use the image in StreamingAssets.

Source Type: Set to Image File here, so the ImageTarget is created from an image file.

Path Type: Set to StreamingAssets here, so the Path is interpreted relative to StreamingAssets.

Path: Image path relative to StreamingAssets.

Name: Name of the target. Choose a name that is easy to remember.

Scale: Set to the physical width of the image in the real world. Here the name card is 9 cm wide in the real world, so it is set to 0.09 (m).

Tracker: The ImageTrackerFrameFilter that loads this ImageTargetController. It is set to one of the ImageTrackerFrameFilter instances in the scene when the target is added, and can be changed afterwards.

The ImageTarget in the scene changes as you type the Path. For this to work, the correct license key must already be filled in.
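If the target does not appear as expected, it can help to confirm that the Path value really points at a file under StreamingAssets. The sketch below is a hypothetical helper, not part of EasyAR: the class name StreamingAssetImageCheck and the file name namecard.jpg are assumptions, and the check only runs in the Unity Editor, where StreamingAssets is an ordinary folder on disk.

```csharp
using System.IO;
using UnityEngine;

// Hypothetical helper (not part of EasyAR): checks that the image named in
// the ImageTargetController's Path exists under StreamingAssets.
public class StreamingAssetImageCheck : MonoBehaviour
{
    // Assumed file name; use the same value you typed into Path.
    [SerializeField] private string relativePath = "namecard.jpg";

    private void Start()
    {
        string fullPath = Path.Combine(Application.streamingAssetsPath, relativePath);

#if UNITY_EDITOR
        // In the Editor, StreamingAssets is a normal folder, so File.Exists works.
        if (File.Exists(fullPath))
        {
            Debug.Log($"Found target image: {fullPath}");
        }
        else
        {
            Debug.LogWarning($"Target image not found: {fullPath}");
        }
#else
        // On some platforms, such as Android, StreamingAssets is packed into
        // the application archive and cannot be checked with File.Exists.
        Debug.Log($"Target image path (not verified on device): {fullPath}");
#endif
    }
}
```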

Create Sparse SpatialMap¶

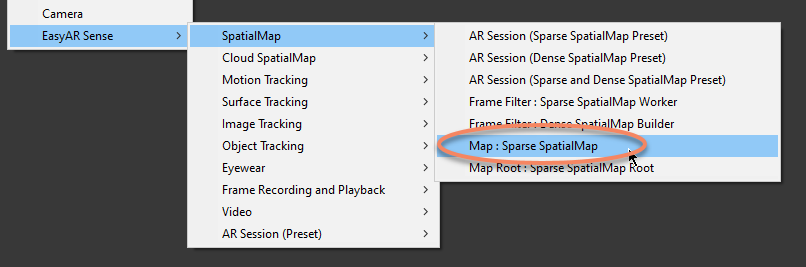

If you want to build a Sparse SpatialMap, you need to create a SparseSpatialMapController with EasyAR Sense > SpatialMap > Map : Sparse SpatialMap.

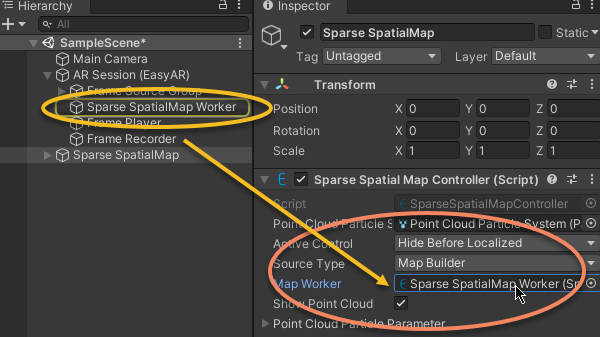

Then configure the SparseSpatialMapController for map building:

Source Type: Set to Map Builder here, so the map is used for map building.

Map Worker: The SparseSpatialMapWorkerFrameFilter that loads this SparseSpatialMapController. It is set to one of the SparseSpatialMapWorkerFrameFilter instances in the scene when the map is added, and can be changed afterwards.

Show Point Cloud: Set to True here so that the point cloud is displayed while the map is being built.

Add 3D Content which Follows Targets or Maps¶

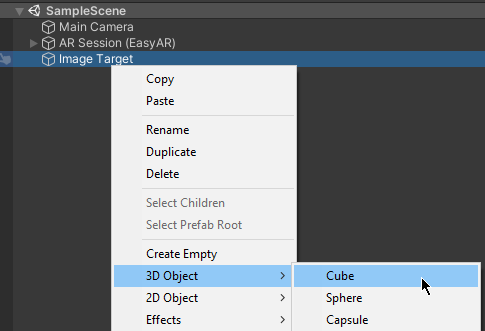

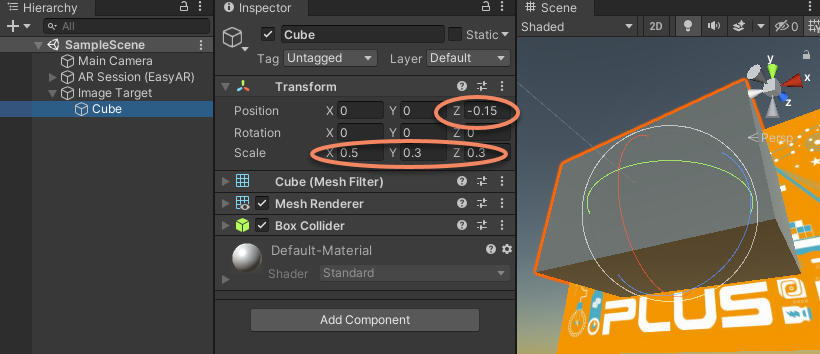

Here we show how to add a 3D object under the ImageTarget node, using a Cube as the sample.

Scale: Set the transform scale as needed. Here we set the scale to {0.5, 0.3, 0.3}.

Position: Set the transform position as needed. Here we set the z value of the position to -0.3 / 2 = -0.15, half the Cube's z scale, so that the bottom of the Cube is aligned with the image.

You can add content under maps in the same way.
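The same parenting and transform values can be reproduced from a script, which also makes the -0.15 offset explicit. This is a minimal sketch under stated assumptions: the class name CubeOnTarget and the target field are hypothetical, and the field is expected to be assigned to the ImageTarget node in the Inspector. Only standard UnityEngine calls are used.

```csharp
using UnityEngine;

// Hypothetical helper (not part of EasyAR): creates a Cube under a target
// node with the scale and position used in this section.
public class CubeOnTarget : MonoBehaviour
{
    // Assign the ImageTarget (or map) node here in the Inspector.
    [SerializeField] private Transform target;

    private void Start()
    {
        if (target == null)
        {
            Debug.LogError("CubeOnTarget: no target assigned.");
            return;
        }

        GameObject cube = GameObject.CreatePrimitive(PrimitiveType.Cube);

        // Parent without keeping the world pose, so the values below are
        // local to the target, as they would be in the Inspector.
        cube.transform.SetParent(target, false);

        var scale = new Vector3(0.5f, 0.3f, 0.3f);
        cube.transform.localScale = scale;

        // Unity's Cube primitive is centered on its pivot, so shifting it by
        // half its z size (-0.3 / 2 = -0.15) puts one face on the image plane.
        cube.transform.localPosition = new Vector3(0f, 0f, -scale.z / 2f);
    }
}
```

Because the Cube is a child of the target, it follows the target whenever the target moves in the scene.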

Run in the Editor¶

If a camera is connected to the computer, the project can be run from the Unity Editor once the above configuration is done.

Run on Android or iOS Devices¶

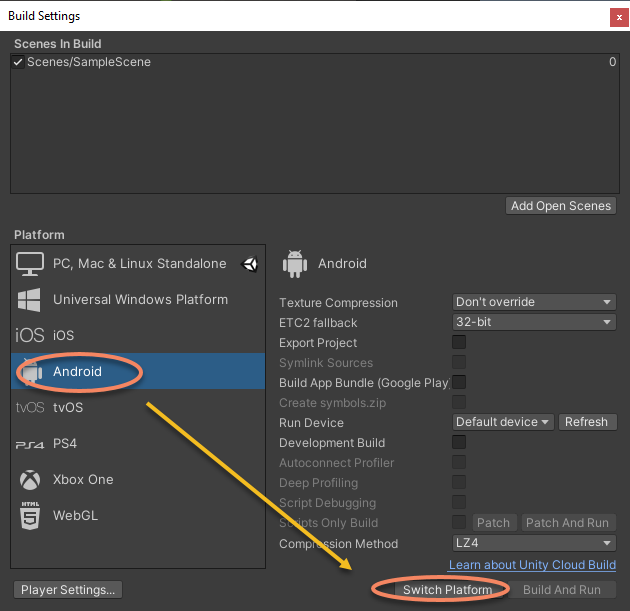

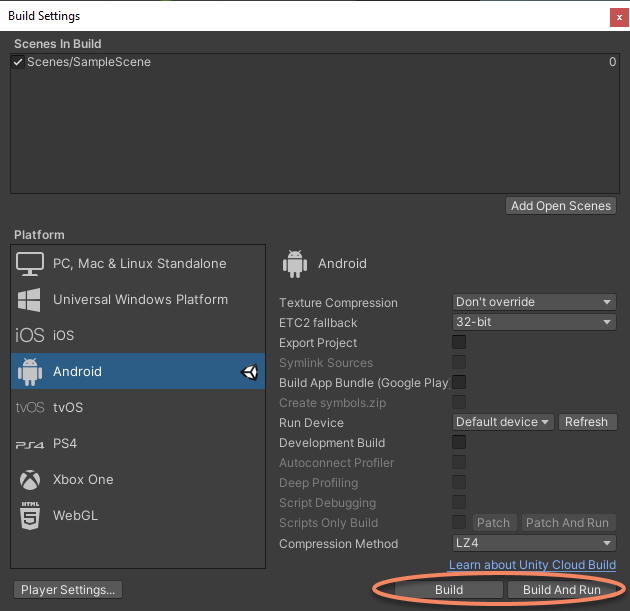

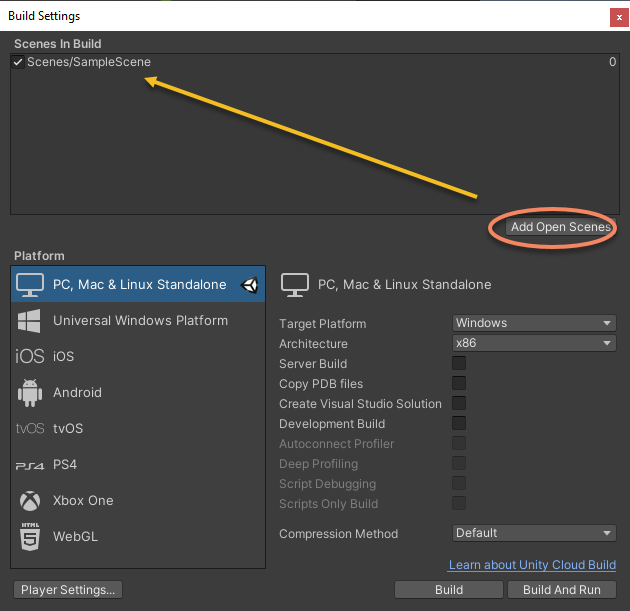

Add your scene to the build settings.

Configure the project according to Android Project Configuration or iOS Project Configuration and switch to the target platform. Then click the Build or Build And Run button in Build Settings, or use an equivalent alternative, to compile the project and install the binaries on the phone. Grant the requested permissions on the phone when the app runs.
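One alternative to the Build Settings buttons is an editor script that calls Unity's BuildPipeline. The sketch below assumes an Android build; the class name, the menu path and the output path Builds/Android/app.apk are hypothetical, and the script must be placed in a folder named Editor. It builds the scenes that are enabled in the build settings and does not install the result on a device.

```csharp
using System.Linq;
using UnityEditor;
using UnityEditor.Build.Reporting;
using UnityEngine;

// Hypothetical editor helper (not part of EasyAR). Place it under an
// "Editor" folder so that it is compiled only for the Unity Editor.
public static class AndroidBuildSketch
{
    [MenuItem("Tools/Build Android (sketch)")]
    public static void Build()
    {
        var options = new BuildPlayerOptions
        {
            // Use the scenes that are enabled in the build settings.
            scenes = EditorBuildSettings.scenes
                .Where(scene => scene.enabled)
                .Select(scene => scene.path)
                .ToArray(),
            // Assumed output location; change it to suit your project.
            locationPathName = "Builds/Android/app.apk",
            target = BuildTarget.Android,
            options = BuildOptions.None
        };

        BuildReport report = BuildPipeline.BuildPlayer(options);

        if (report.summary.result == BuildResult.Succeeded)
        {
            Debug.Log($"Build succeeded: {report.summary.outputPath}");
        }
        else
        {
            Debug.LogError($"Build finished with result: {report.summary.result}");
        }
    }
}
```

The project configuration steps from Android Project Configuration still apply before running this menu item.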